The Perceptron algorithm is one of the simplest forms of a neural network and is used for binary classification tasks. It is loosely modeled on a single neuron in the human brain, which makes it a basic building block of larger neural networks.

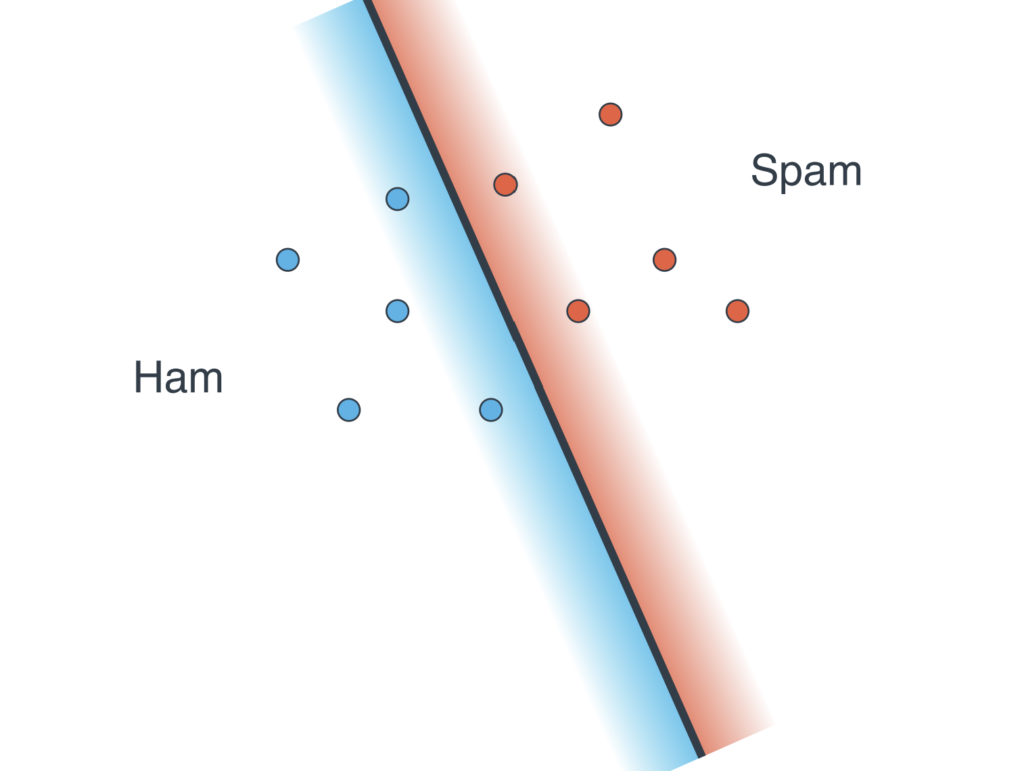

At its core, the Perceptron algorithm separates data points into two classes using a linear decision boundary in the feature space (a line in 2D). It is a single-layer neural network: each input feature is multiplied by a weight, the weighted inputs are summed together with a bias term, and the sign of that sum determines the predicted class.
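The weighted-sum-then-threshold idea can be sketched in a few lines of Python. This is a minimal illustration rather than a reference implementation: the function names, the bias term, the 0/1 class encoding, and the example weights are all assumptions made for the sketch.

```python
def weighted_sum(weights, bias, features):
    """Return w . x + b for one data point."""
    return sum(w * x for w, x in zip(weights, features)) + bias


def predict(weights, bias, features):
    """Step activation: class 1 if the weighted sum is non-negative, else class 0."""
    return 1 if weighted_sum(weights, bias, features) >= 0 else 0


# Hypothetical weights and bias, chosen only for illustration.
weights = [2.0, -1.0]
bias = -1.0

print(predict(weights, bias, [3.0, 1.0]))  # weighted sum: 2*3 - 1*1 - 1 = 4
print(predict(weights, bias, [0.0, 2.0]))  # weighted sum: 2*0 - 1*2 - 1 = -3
```

The first point has a positive weighted sum and lands in class 1, while the second has a negative sum and lands in class 0.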

Here’s a breakdown of its key components and workings:

- Classification:

  - The Perceptron algorithm is primarily used for binary classification tasks, where it separates data points into two classes based on their features.

- Representation of Decision Boundary:

  - In a two-dimensional space, the decision boundary of a Perceptron is represented by a line. In higher dimensions, it’s a hyperplane.

- How to Move the Decision Boundary (Line):

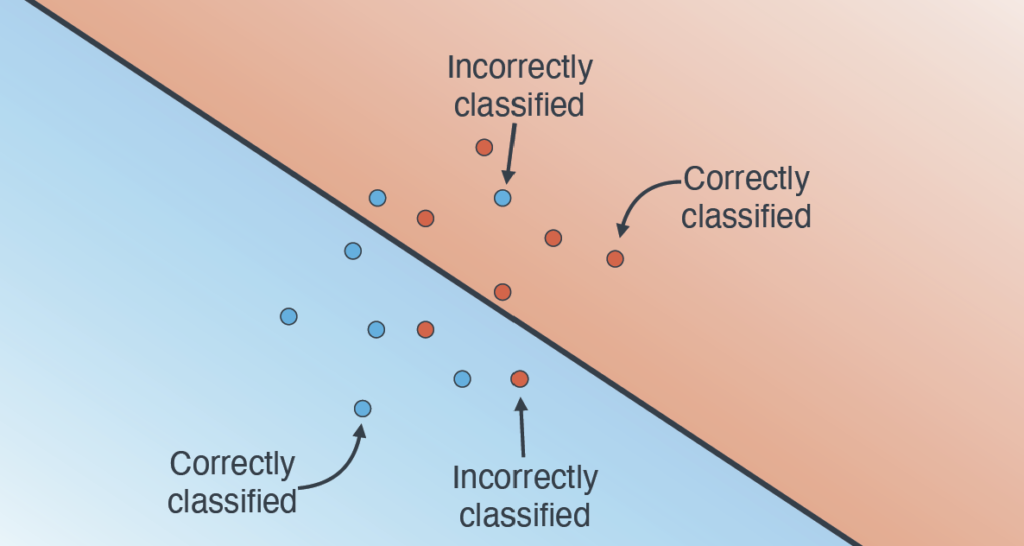

- The decision boundary (line) of a Perceptron can be moved by adjusting the weights assigned to each input feature.

- If a data point is misclassified, the weights are adjusted to bring the decision boundary closer to the misclassified point, thus improving classification accuracy.

- Rotating and Translating:

- Rotating or translating the decision boundary of a Perceptron involves adjusting the weights to change the orientation or position of the line separating the classes.

- This adjustment is done iteratively based on the errors made by the Perceptron on the training data.

- Perceptron Trick:

  - The Perceptron trick is the rule used to update the weights when the Perceptron misclassifies a point.

  - If the point should have been classified as positive, its feature vector (usually scaled by a small learning rate) is added to the current weight vector; if it should have been classified as negative, it is subtracted.

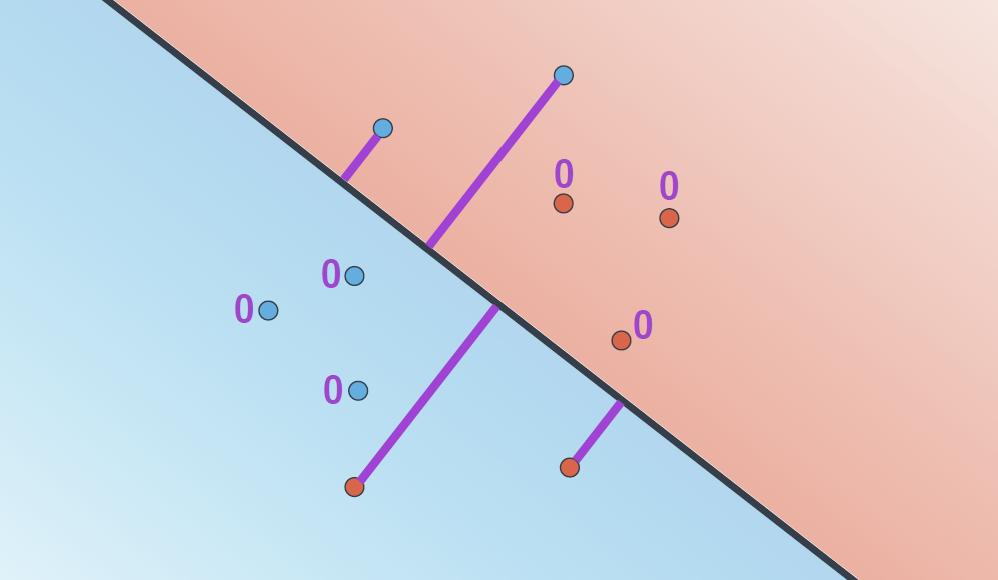

- Positive and Negative Regions:

  - The positive and negative regions are the areas on either side of the decision boundary.

  - Points falling on one side of the boundary are classified as belonging to one class (positive), while points on the other side are classified as belonging to the other class (negative).

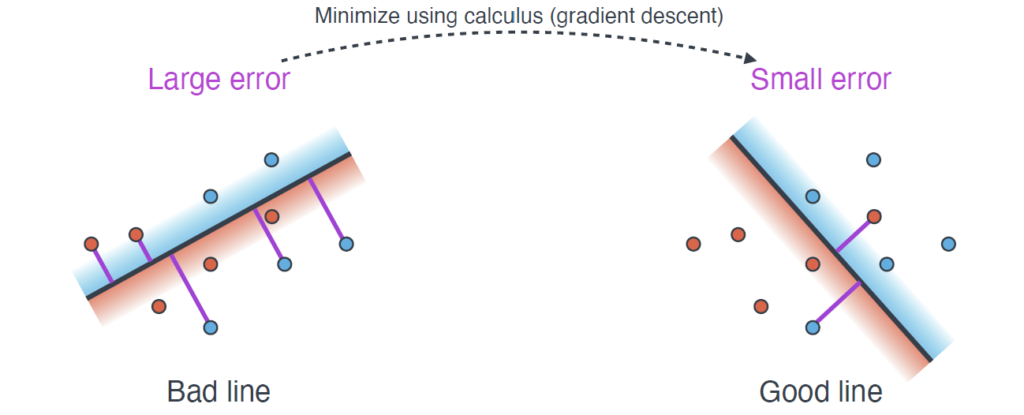

- Perceptron Error:

  - The Perceptron error measures misclassification. For a single data point, it is the difference between the actual class and the class predicted by the Perceptron, so it is zero whenever the prediction is correct.

  - The goal of training is to minimize this error by adjusting the weights.

- Gradient Descent:

  - The classic Perceptron rule is usually presented without reference to gradient descent, but it can be interpreted as stochastic gradient descent on a loss that penalizes only misclassified points. It is also a precursor to more advanced algorithms that use gradient descent explicitly.

  - Gradient descent iteratively adjusts the weights in the direction that reduces the error, using the gradient of the error function with respect to the weights.
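For reference, the components listed above are often written in the following compact form. The notation is an assumption of this sketch rather than something fixed by the text: labels are encoded as $y_i \in \{-1, +1\}$, $\mathbf{w}$ is the weight vector, $b$ is a bias term, $\eta > 0$ is a learning rate, and $\mathcal{M}$ is the set of currently misclassified points.

```latex
\begin{aligned}
\hat{y}_i &= \operatorname{sign}\left(\mathbf{w} \cdot \mathbf{x}_i + b\right)
  && \text{(prediction)} \\
L(\mathbf{w}, b) &= \sum_{i \in \mathcal{M}} -\,y_i \left(\mathbf{w} \cdot \mathbf{x}_i + b\right)
  && \text{(Perceptron error)} \\
\mathbf{w} &\leftarrow \mathbf{w} + \eta\, y_i\, \mathbf{x}_i, \qquad
b \leftarrow b + \eta\, y_i
  && \text{(Perceptron trick, for } i \in \mathcal{M}\text{)}
\end{aligned}
```

Each term of $L$ is positive for a misclassified point, and its gradient with respect to $\mathbf{w}$ is $-y_i \mathbf{x}_i$. One way to read the Perceptron trick is therefore as a single stochastic gradient step on this error.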

Let’s consider a classic example of classifying fruits as either apples or oranges based on two features: their weight and their color. Using the Perceptron algorithm, we assign a model weight to each of these features and adjust those model weights iteratively to improve classification accuracy. For instance, if a fruit is misclassified as an apple when it’s actually an orange, the model weights are adjusted to correct this error.
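A small training loop makes the fruit example concrete. Everything specific in this sketch is made up for illustration: the eight data points, the encoding of color as a redness score between 0 and 1, the label convention (0 for apple, 1 for orange), the learning rate, and the function names.

```python
def predict(weights, bias, features):
    """Return 1 (orange) if the weighted sum is non-negative, else 0 (apple)."""
    score = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if score >= 0 else 0


def train_perceptron(samples, labels, learning_rate=0.1, max_epochs=1000):
    """Repeat passes over the data until one full pass makes no mistakes."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for epoch in range(1, max_epochs + 1):
        mistakes = 0
        for features, label in zip(samples, labels):
            error = label - predict(weights, bias, features)  # -1, 0, or +1
            if error != 0:
                # Move the boundary toward the misclassified fruit.
                weights = [w + learning_rate * error * x
                           for w, x in zip(weights, features)]
                bias += learning_rate * error
                mistakes += 1
        if mistakes == 0:
            break
    return weights, bias, epoch


# Made-up data: (weight in hundreds of grams, redness score from 0 to 1).
fruits = [
    (1.5, 0.90), (1.7, 0.80), (1.6, 0.95), (1.4, 0.85),  # apples
    (2.0, 0.20), (2.2, 0.30), (1.9, 0.10), (2.1, 0.25),  # oranges
]
labels = [0, 0, 0, 0, 1, 1, 1, 1]

weights, bias, epochs = train_perceptron(fruits, labels)
predictions = [predict(weights, bias, fruit) for fruit in fruits]
print("epochs used:", epochs)
print("all correct:", predictions == labels)
```

Because this toy data set is linearly separable, the loop stops once a full pass produces no mistakes. On data that is not separable it would simply run until `max_epochs`.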

Moving the Decision Boundary: The decision boundary, represented by a line in a two-dimensional space, separates the positive and negative regions corresponding to the two classes. By adjusting the weights assigned to each feature, the decision boundary can be moved to better classify data points. Imagine you’re drawing a line to separate apples and oranges on a scatter plot – the Perceptron algorithm does just that, albeit in a more mathematical manner.
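To see how the weights control the line, it can help to rewrite the boundary in slope-intercept form. The sketch below assumes a 2D boundary of the form `w1 * x1 + w2 * x2 + b = 0`, where `b` is a bias term; the helper name `boundary_line` and the numbers are hypothetical.

```python
def boundary_line(weights, bias):
    """Rewrite w1*x1 + w2*x2 + b = 0 as x2 = slope * x1 + intercept."""
    w1, w2 = weights
    if w2 == 0:
        raise ValueError("vertical boundary: no slope-intercept form")
    return -w1 / w2, -bias / w2


# Starting line (hypothetical values).
print(boundary_line([1.0, 2.0], -4.0))

# Changing only the bias keeps the slope and shifts the intercept.
print(boundary_line([1.0, 2.0], -6.0))

# Changing a feature weight changes the slope, so the line rotates.
print(boundary_line([2.0, 2.0], -4.0))
```

The second call leaves the slope at -0.5 and moves the intercept from 2.0 to 3.0, while the third changes the slope to -1.0.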

The Perceptron Trick: When a data point is misclassified, the Perceptron trick comes into play. It adjusts the weights of the features so that the decision boundary moves toward the misclassified point. This process is repeated until every training point is classified correctly, which is only guaranteed to happen when the two classes are linearly separable, or until a satisfactory level of classification accuracy is achieved.
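A single application of the trick might look like the following sketch. The function names, the 0/1 label encoding, the bias term, and the learning rate of 0.5 are assumptions made for the example.

```python
def score(weights, bias, point):
    """Weighted sum w . x + b for one point."""
    return sum(w * x for w, x in zip(weights, point)) + bias


def perceptron_trick(weights, bias, point, label, learning_rate=0.1):
    """Return updated (weights, bias); unchanged if the point is already correct."""
    predicted = 1 if score(weights, bias, point) >= 0 else 0
    if predicted == label:
        return weights, bias
    direction = 1 if label == 1 else -1  # add for positives, subtract for negatives
    new_weights = [w + learning_rate * direction * x
                   for w, x in zip(weights, point)]
    return new_weights, bias + learning_rate * direction


weights, bias = [1.0, 1.0], -3.0
point, label = [1.0, 1.0], 1  # a positive point currently on the negative side

print(score(weights, bias, point))  # -1.0, so the point is misclassified
new_weights, new_bias = perceptron_trick(weights, bias, point, label, learning_rate=0.5)
print(score(new_weights, new_bias, point))  # 0.5, now on the positive side
```

Here one update is enough to fix the point. With a smaller learning rate the score would still move in the right direction, but several updates might be needed.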

Positive and Negative Regions: In the context of the Perceptron algorithm, the positive and negative regions refer to the areas on either side of the decision boundary. Data points falling on one side are classified as belonging to one class (positive), while those on the other side belong to the opposite class (negative).

Example: Imagine plotting apples and oranges on a graph, with fruit weight on one axis and color on the other. As the model’s weights are adjusted after each misclassification, the decision boundary shifts until it separates the positive region from the negative region.

Perceptron Error and Gradient Descent: The Perceptron error measures the mismatch between the predicted and actual classes of the data points. The goal is to minimize this error by iteratively adjusting the weights. Although the classic Perceptron rule is usually stated without mentioning gradient descent, it can be interpreted as stochastic gradient descent on a loss that is zero for correctly classified points, and it lays the groundwork for more advanced optimization techniques.
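One common way to make this concrete is the loss `max(0, -y * score)`, summed over all points, with labels encoded as +1 and -1. That choice of loss, the label encoding, the bias term, and the function names below are assumptions of this sketch, not something the text above prescribes.

```python
def score(weights, bias, point):
    """Weighted sum w . x + b for one point."""
    return sum(w * x for w, x in zip(weights, point)) + bias


def perceptron_loss(weights, bias, samples, labels):
    """Sum of max(0, -y * score); only misclassified points contribute."""
    return sum(max(0.0, -y * score(weights, bias, x))
               for x, y in zip(samples, labels))


def subgradient_step(weights, bias, point, label, learning_rate):
    """One stochastic subgradient step on max(0, -y * score) for one sample."""
    if label * score(weights, bias, point) > 0:
        return weights, bias  # correctly classified: the gradient is zero
    grad_w = [-label * x for x in point]  # d(-y * score) / dw
    grad_b = -label                       # d(-y * score) / db
    new_weights = [w - learning_rate * g for w, g in zip(weights, grad_w)]
    return new_weights, bias - learning_rate * grad_b


# Hypothetical data and starting parameters.
samples = [[1.0, 1.0], [2.0, 2.0], [0.0, 0.0]]
labels = [1, 1, -1]
weights, bias = [1.0, 1.0], -3.0

loss_before = perceptron_loss(weights, bias, samples, labels)
weights, bias = subgradient_step(weights, bias, samples[0], labels[0], 0.5)
loss_after = perceptron_loss(weights, bias, samples, labels)
print(loss_before, loss_after)
```

Stepping against the gradient `-y * x` is the same as adding `y * x` to the weights, which is why this step coincides with the Perceptron trick.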

Conclusion

The Perceptron algorithm is a fundamental tool for binary classification tasks in machine learning. Its simplicity makes it an essential concept to grasp for anyone venturing into the field. By understanding how the Perceptron algorithm works and where it applies, you’re equipped with a solid foundation to explore more complex neural network architectures and algorithms.

Whether you’re classifying fruits or filtering spam emails, the Perceptron algorithm offers a simple starting point for binary classification problems, particularly when the two classes can be separated by a line or hyperplane.